This is my second (or third) dalliance with AWS CodeBuild. I think I'm starting to like it now though. I recently wrote a bash script to compile a version of Perl to create Lambdas as part of my Perl Lambda project. My so-called `make-a-perl` script launches an EC2 instance, downloads the Perl source code, compiles it, zips it up and copies it to an S3 bucket.

I suspected I could use AWS CodeBuild to do all of the aforementioned operations, but did not want to descend down the CodeBuild rabbit hole once more. But alas, the temptation was just too much!
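
The operations listed above (minus launching the EC2 instance) can be sketched roughly as follows. This is not the `make-a-perl` script itself, only an illustration of the steps; the Perl version, install prefix, bucket name and the `perl_build_steps` function are all hypothetical.

```shell
#!/bin/bash
# Sketch of the build steps only (not the author's make-a-perl script).
# PERL_VERSION, PREFIX and BUCKET are hypothetical values.
PERL_VERSION="${PERL_VERSION:-5.28.1}"
PREFIX="${PREFIX:-/opt/perl}"
BUCKET="${BUCKET:-my-perl-builds}"

# Print the command for each step: download, unpack, compile, zip, upload.
# Nothing is executed here; pipe the output to bash to actually run it.
perl_build_steps() {
    local tarball="perl-${PERL_VERSION}.tar.gz"
    cat <<EOF
curl -fsSLO https://www.cpan.org/src/5.0/${tarball}
tar xzf ${tarball}
cd perl-${PERL_VERSION} && ./Configure -des -Dprefix=${PREFIX} && make && make install
cd ${PREFIX} && zip -qr /tmp/perl-${PERL_VERSION}.zip .
aws s3 cp /tmp/perl-${PERL_VERSION}.zip s3://${BUCKET}/
EOF
}

# Review the steps first:
#   perl_build_steps
# Then run them on a build host that has a compiler, zip and the AWS CLI:
#   perl_build_steps | bash -e
```

Printing the commands rather than running them keeps the sketch safe to try anywhere; the same list of commands is what a CodeBuild build phase would need to run.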

Showing posts with label aws. Show all posts

Sunday, February 17, 2019

Tuesday, March 14, 2017

AWS CodeBuild - HowTo

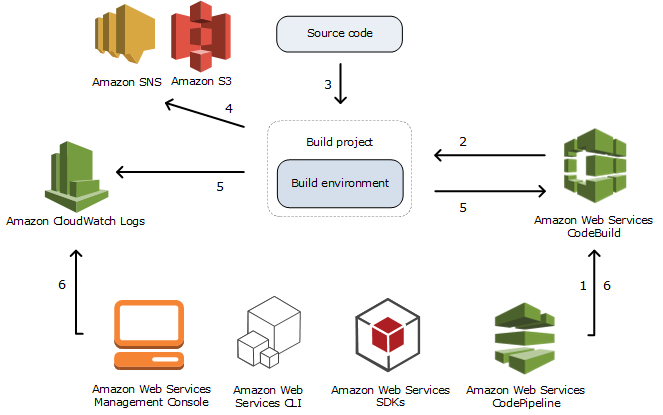

Amazon Web Services' CodeBuild is a managed service that allows developers to build projects from source.

Typically CodeBuild is used as part of your CI/CD pipeline, perhaps along with other AWS tools like CodeCommit, CodePipeline and CodeDeploy.

This blog will explore the use of CodeBuild to build the Bedrock project and update a yum repository. Along the way I'll detail some of the things I've learned and the path I took to automating the Bedrock build.

Friday, December 30, 2016

Follow-up: Using AWS Simple Email Service (SES) for Inbound Mail

As I alluded to in my first post on this subject, it's possible to use AWS Lambda to process mail you receive as well. In this final post on AWS Simple Email Service, I'll show you how to forward your email using a Lambda function.

Labels: aws, aws lambda, aws ses, javascript, serverless, ses

Thursday, December 29, 2016

Part 2: Using AWS Simple Email Service (SES) for Inbound Mail

Delete, delete, delete, delete, forward...

In part one of my two-part blog on Amazon's Simple Email Service, we set up the necessary resources to receive and process inbound email. In part two, we'll create a worker that reads an SQS queue and forwards the mail to another email address.

Sunday, December 18, 2016

Using AWS Simple Email Service (SES) for Inbound Mail

Oy! Good thing no one else can see THIS message!

In our architectures, now more than ever, it is important to reduce the surface area for attacks. That means closing down as many access points to your network as possible. SMTP running on port 25 is a gaping hole that most architects interested in securing their networks want turned off, like yesterday!

If you don't want to completely outsource your inbound mail to a managed service, the AWS SES inbound email service is one way to have your cake and eat it too. It's especially useful if you want to allow your application to receive mail but you don't necessarily want or need to host an email service that includes an IMAP or POP server. You may only need to receive mail, in which case AWS SES is the perfect solution. Along with a scalable managed service, SES also includes spam filtering capabilities.

In this two-part blog, we'll explore setting up a simple inbound mail handler for openbedrock.net using Amazon Web Services Simple Email Service (SES).

Saturday, December 17, 2016

Using AWS S3 as a Yum Repository

In an earlier post, I described how you can use an S3 bucket to host a yum repository. In this post we'll give the repository a friendlier name and create an index page that's a tad more helpful. If you're not using AWS S3 and CloudFront to host your static assets, you might want to consider looking into this simple-to-use solution for running a website without having to manage a webserver.

Visit http://repo.openbedrock.net to see the final product.

Monday, December 5, 2016

AWS re:Invent - Wrap Up

It's Friday, I'm blogging at 33,000 feet and the captain has just turned off the seat belt signs, informed us that it's 50 degrees back at our final destination, Philadelphia, Pennsylvania and directed us to just sit back and relax. I get very nervous when the captain of a plane flying at 33,000 feet tells me my final destination is Philadelphia. I'm hoping to do a few more trips to AWS re:Invent before my "final destination" arrives.

CloudBlogging (pun intended) about my 5 days at one of the most important cloud computing events each year thanks to my Acer Chromebook and my free GoGo Inflight passes. I still have 6 left of the 12 I received when I purchased the C720 nearly three years ago prior to attending my second AWS re:Invent extravaganza. Wifi at 33,000 feet is spotty but it is possible to complete a blog post on a 5 hour trip across the country.

No blog here would be complete without a shameless plug (I promise this is the only plug in the blog) for Chromebooks - #LoveMeSomeChromebook.

If you haven't read the rest of this somewhat tantalizing series on AWS re:Invent start here. I'll wrap up the blog series with a recap of the week's highlights, opine a bit and provide you with some of the key takeaways. Enjoy!

Labels: 12 factor apps, amazon web services, aws, lambda, las vegas, re:invent, serverless

Saturday, April 23, 2016

Creating a yum repository using AWS S3 buckets

Here's a short bash script I use to create a yum repo in an S3 bucket. The script does five (5) things:

- creates a local repo in a temporary directory

- copies an RPM file to the local repo

- creates the yum repository using createrepo

- syncs the local directory with the S3 bucket

- sets public permissions for the bucket (make sure this is what you actually want to do)

Before you get started, create the bucket and configure it to host a static website. No worries if you don't actually have an index.html.

Create the bucket:

$ aws s3 mb s3://openbedrock-repo

Then configure it to host static files:

$ aws s3 website s3://openbedrock-repo --index-document index.html

Here's the bash script to create the repo:

    #!/bin/bash

    # usage: sudo $0 path-to-rpm [bucket]

    # bucket defaults to openbedrock-repo
    BUCKET="${2:-openbedrock-repo}"

    # create a temporary local repo and sync with AWS S3 repo
    if test -e "$1"; then
        # create a temporary repo
        repo=$(mktemp -d)
        mkdir "${repo}/noarch"

        cp "$1" "${repo}/noarch"
        createrepo "$repo"

        # sync local repo with S3 bucket, make it PUBLIC
        # (sync always recurses, so no --recursive option is needed)
        PERMISSION="--grants read=uri=http://acs.amazonaws.com/groups/global/AllUsers"
        aws s3 sync "${repo}" "s3://$BUCKET/" $PERMISSION
        aws s3 ls "s3://$BUCKET/"

        # cleanup local copy of repo
        rm -rf "$repo"
    fi
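
Once the bucket is synced, a client machine needs a `.repo` file that points yum at the bucket's website endpoint. Here's a minimal sketch of generating one. The `make_repo_file` helper, the repo id, the placeholder URL and `gpgcheck=0` (which assumes the RPMs are unsigned) are all illustrative assumptions, so adjust them for your own bucket.

```shell
#!/bin/bash
# Hypothetical helper: print a yum .repo stanza for a repository served over HTTP.
# $1 = repo id (also used as the name), $2 = base URL of the repository root,
# i.e. the location that contains the repodata/ directory made by createrepo.
make_repo_file() {
    local repo_id="$1" base_url="$2"
    cat <<EOF
[${repo_id}]
name=${repo_id}
baseurl=${base_url%/}
enabled=1
gpgcheck=0
EOF
}

# Example (needs root to write under /etc/yum.repos.d; URL is a placeholder):
#   make_repo_file openbedrock http://repo.example.com/ > /etc/yum.repos.d/openbedrock.repo
#   yum --disablerepo='*' --enablerepo=openbedrock list available
```

The base URL should be the bucket's static website endpoint (or whatever friendly name you map to it), since the script runs createrepo at the top of the synced directory.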

Saturday, October 18, 2014

AWS with Bedrock

I'm starting to tinker with using Amazon's web services from Bedrock pages. Embedding AWS in Bedrock applications is not new - particularly if you are running Bedrock on an EC2 instance, using RDS (hosted MySQL), or using Amazon's S3 object storage system. There are other Amazon web services that are particularly useful in any web development environment, and exposing these to Bedrock pages looks interesting.

Plugin Bug

Fixed a bug in the <plugin> tag. Class names were not being parsed correctly if there was more than 1 level to the namespace.

For example, Amazon::SNS::VerifySignature was being incorrectly parsed as Amazon::SNS, which happens to be a valid Perl module.

Fix pushed to master.

Saturday, October 11, 2014

Amazon SNS - Verifying Signatures

While creating a Bedrock example of using Amazon's Simple Notification Service, I was surprised to find that Googling for a Perl example of verifying the signature from an SNS notification message came up empty. So I wrote my own...
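
For readers who want the general shape of the check, here's a rough sketch in bash using openssl. It is not the Perl code from the post. It assumes a `Notification` message with `SignatureVersion` 1 (SHA1 with RSA), that the fields have already been pulled out of the JSON, and that the certificate from `SigningCertURL` has been downloaded and its URL validated. The function names are hypothetical.

```shell
#!/bin/bash
# Build the canonical string SNS signs for a "Notification" message:
# each field name and value on its own line, in this fixed order.
# Pass an empty subject ("") if the message has no Subject field.
sns_string_to_sign() {
    local message="$1" message_id="$2" subject="$3" timestamp="$4" topic_arn="$5"
    printf 'Message\n%s\n' "$message"
    printf 'MessageId\n%s\n' "$message_id"
    if [ -n "$subject" ]; then
        printf 'Subject\n%s\n' "$subject"
    fi
    printf 'Timestamp\n%s\n' "$timestamp"
    printf 'TopicArn\n%s\n' "$topic_arn"
    printf 'Type\nNotification\n'
}

# Check a base64 signature against the PEM certificate downloaded from the
# message's SigningCertURL. Returns 0 when the signature verifies.
sns_verify_signature() {
    local string_file="$1" signature_b64="$2" cert_file="$3"
    local workdir status
    workdir=$(mktemp -d)
    printf '%s' "$signature_b64" | openssl base64 -d -A > "$workdir/sig.bin"
    openssl x509 -in "$cert_file" -pubkey -noout > "$workdir/pub.pem"
    openssl dgst -sha1 -verify "$workdir/pub.pem" \
        -signature "$workdir/sig.bin" "$string_file" > /dev/null
    status=$?
    rm -rf "$workdir"
    return $status
}

# Example (variables hold fields already extracted from the message):
#   sns_string_to_sign "$msg" "$id" "$subject" "$ts" "$arn" > /tmp/string.txt
#   sns_verify_signature /tmp/string.txt "$signature" cert.pem && echo "valid"
```

The string is written straight to a file because its trailing newline is part of what gets signed, and shell command substitution would strip it.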

Saturday, September 27, 2014

New Bedrock AMI on Amazon Cloud

It's easy to try Bedrock...spark up a t2.micro EC2 instance on Amazon's cloud using the new Bedrock AMI!

http://twiki.openbedrock.net/twiki/bin/view/Bedrock/BedrockAMI
